IDS for PCI Compliance on Google Cloud Platform

- Panot Thaiuppathum

- Compliance, Google

- 16 Feb, 2021

Some cloud infrastructure has to be designed for PCI compliance: for example, a website that processes credit card payments directly with a merchant bank rather than through a third-party payment gateway, a payment gateway website, or any website that needs to store customers' credit card information, which is very sensitive data. All of these websites have to be PCI compliant. More information can be found here, or you can simply search for it; there are many rules to comply with. I won't go into detail here because it is a huge topic.

If you hope to find a complete boilerplate template provided by a cloud provider, unfortunately I couldn't find one at the time of writing. However, there are some useful projects built with Infrastructure-as-Code (IaC) tools such as Terraform, AWS CloudFormation and Azure Blueprints, so at least you don't have to start from zero. https://github.com/GoogleCloudPlatform/pci-gke-blueprint should be near the very top of the search results. It comes with a good example of separating projects and subnets into in-scope and out-of-scope, together with firewall rules and the concept of microservices hosted on Google Kubernetes Engine (GKE). It already covers many of the PCI DSS requirements, but one missing piece is PCI DSS Requirement 11.

PCI DSS requirement 11.4 states that you must implement Intrusion Detection Systems (IDS) or Intrusion Prevention Systems (IPS) and other critical continuous detective checks around the Internet and cardholder data environment (CDE) entry points.

To fill this gap, we need an IDS in place within our infrastructure to detect suspicious traffic in our in-scope subnet. But how?

Fortunately, cloud providers such as AWS and Google Cloud offer a VPC mirroring feature (Traffic Mirroring on AWS, Packet Mirroring on Google Cloud). Both work in a similar way: they copy all traffic, or only the traffic matching a filter, from a source to a collector destination. On Google Cloud, the source can be a subnet, a network tag or specific instances, and the collector is an internal load balancer. I assume you already know what a VPC is; if not, it is another important cloud infrastructure fundamental worth learning.
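On Google Cloud, the two moving parts look roughly like the sketch below: a forwarding rule of an internal load balancer flagged as a mirroring collector, and a packet mirroring policy that sends the in-scope subnet's traffic to it. The region, network, subnet and resource names, and the existing internal backend service ids-backend, are all hypothetical placeholders. By default the script only prints the gcloud commands; set DRY_RUN=0 to actually run them.

```bash
#!/usr/bin/env bash
# Sketch: internal load balancer used as the packet mirroring collector,
# plus a policy that mirrors the in-scope subnet to it.
# Every name below is a hypothetical placeholder.
set -euo pipefail

REGION="${REGION:-us-central1}"
NETWORK="${NETWORK:-pci-vpc}"                        # VPC containing both subnets
COLLECTOR_SUBNET="${COLLECTOR_SUBNET:-ids-subnet}"   # subnet of the Suricata VMs
IN_SCOPE_SUBNET="${IN_SCOPE_SUBNET:-in-scope-subnet}"
BACKEND_SERVICE="${BACKEND_SERVICE:-ids-backend}"    # existing internal backend service
DRY_RUN="${DRY_RUN:-1}"                              # 1 = only print the commands

# Print the command in dry-run mode; execute it otherwise.
run() {
  if [[ "$DRY_RUN" == "0" ]]; then "$@"; else printf '%s\n' "$*"; fi
}

create_collector() {
  # --is-mirroring-collector marks this forwarding rule as a mirroring target.
  run gcloud compute forwarding-rules create ids-collector-ilb \
    --region="$REGION" --network="$NETWORK" --subnet="$COLLECTOR_SUBNET" \
    --backend-service="$BACKEND_SERVICE" --load-balancing-scheme=internal \
    --ip-protocol=TCP --ports=all --is-mirroring-collector
}

create_mirroring_policy() {
  # No --filter-* flags, so the default filter applies; add e.g.
  # --filter-cidr-ranges or --filter-protocols to mirror only a subset.
  run gcloud compute packet-mirrorings create in-scope-mirroring \
    --region="$REGION" --network="$NETWORK" \
    --collector-ilb=ids-collector-ilb \
    --mirrored-subnets="$IN_SCOPE_SUBNET"
}

create_collector
create_mirroring_policy
```

The collector is created first because the mirroring policy refers to the forwarding rule by name.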

Behind the internal load balancer, we can then run a VM with an open-source IDS, configure the IDS rules as needed, and have it write suspicious traffic to a log file. That should be it.
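As an illustration of what configuring the IDS rules can mean, the sketch below writes a single custom Suricata rule to a local file and, only if Suricata and its default config are installed on the machine, validates it with suricata -T. The rule (SSH SYN packets into $HOME_NET), its message and SID 1000001 are my own hypothetical example, not part of this setup; the setup.sh in this post relies on rulesets managed by suricata-update, and loading extra rule files into the suricata-gcsfuse container is not covered here.

```bash
#!/usr/bin/env bash
# Sketch: a hypothetical custom Suricata rule, validated with `suricata -T`
# only when a local Suricata install is available.
set -euo pipefail

RULES_FILE="${RULES_FILE:-./local.rules}"

# Quoted 'EOF' keeps $HOME_NET literal; Suricata resolves it from suricata.yaml.
cat > "$RULES_FILE" <<'EOF'
# Alert on TCP SYN packets to port 22 (SSH) heading into the in-scope network.
alert tcp any any -> $HOME_NET 22 (msg:"LOCAL SSH connection attempt to in-scope subnet"; flags:S; classtype:attempted-admin; sid:1000001; rev:1;)
EOF

if command -v suricata >/dev/null 2>&1 && [[ -f /etc/suricata/suricata.yaml ]]; then
  # -T: test the configuration and rules, then exit; -S: load only this rule file.
  suricata -T -c /etc/suricata/suricata.yaml -S "$RULES_FILE"
else
  echo "Suricata not found; wrote ${RULES_FILE} without validating it"
fi
```

Local rules conventionally use SIDs of 1000000 and above so they do not clash with downloaded rulesets.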

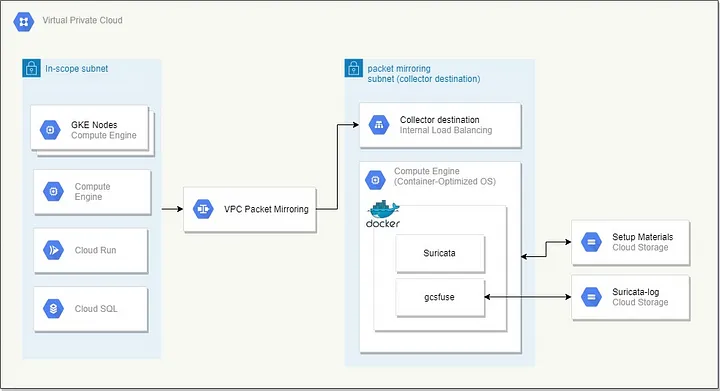

Applying the proposed solution above to the pci-gke-blueprint project as the base infrastructure, we should end up with something like the diagrams below.

Overview of IDS with Suricata and gcsfuse on Google Cloud Platform via Packet Mirroring

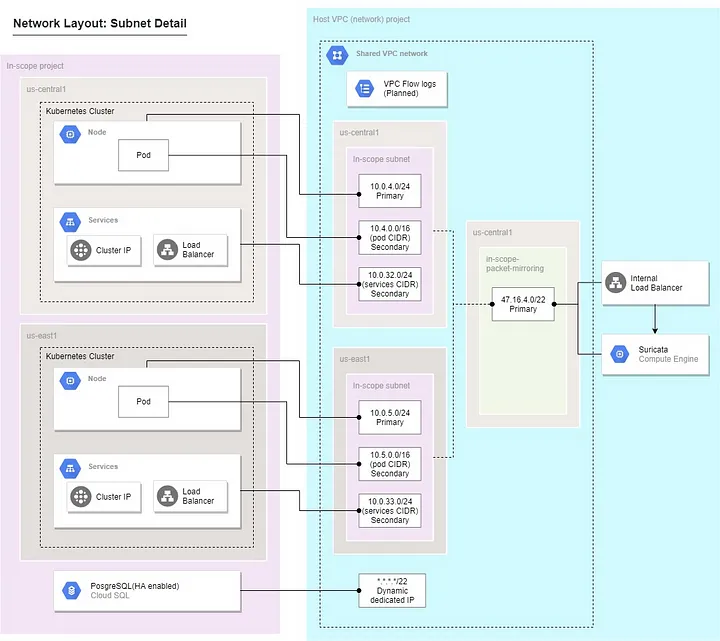

Network diagram after adding Packet Mirroring and the Suricata instance

I picked Suricata as the IDS because it is open source and mature.

Here is how I implemented it:

- Use the pci-gke-blueprint project as the base infrastructure, with some modifications to support multiple regions/clusters. This is actually optional: the solution below works with any kind of service, as long as it runs in a VPC that supports packet mirroring.

- Use VPC Packet Mirroring to mirror traffic from the in-scope subnet to an internal load balancer in the network project.

Created VPC Packet Mirroring


- Create a new Google Cloud Storage bucket and upload the setup.sh file (suricata-gcsfuse/setup.sh at main · panot-hong/suricata-gcsfuse on GitHub):

```bash
#! /bin/bash
set -e
set -x

SURICATA_CONTAINER_NAME="suricata-gcsfuse"
SURICATA_IMAGE="panot/suricata-gcsfuse:6.0"

# COS protects /root/ as read-only, while docker-credential-gcr configure-docker attempts
# to create the /root/.docker folder for the docker configuration, so it would fail.
# Instead we override the home directory of the root user.
# https://stackoverflow.com/a/51237418/6837989
HOME_ROOT="/home/root"
HOME_ROOT_OVERRIDE="sudo HOME=${HOME_ROOT}"

# Authenticate with the private Google Container Registry for the COS instance
${HOME_ROOT_OVERRIDE} docker-credential-gcr configure-docker

# Unmount if it was previously mounted
fusermount -u /var/log/suricata || true

if [ ! "$(docker ps -q -f name=${SURICATA_CONTAINER_NAME})" ]; then
  if [ "$(docker ps -aq -f status=exited -f name=${SURICATA_CONTAINER_NAME})" ]; then
    # cleanup
    ${HOME_ROOT_OVERRIDE} docker rm ${SURICATA_CONTAINER_NAME}
  fi

  # Download the service account key file used by gcsfuse to /etc/gcloud/,
  # authenticating with the VM's own access token from the metadata server.
  # --fail aborts on HTTP errors instead of saving the error body as the key file.
  mkdir -p /etc/gcloud
  curl --fail -X GET \
    -H "Authorization: Bearer $(curl --silent --header "Metadata-Flavor: Google" \
      http://metadata.google.internal/computeMetadata/v1/instance/service-accounts/default/token | grep -o '"access_token":"[^"]*' | cut -d'"' -f4)" \
    -o "/etc/gcloud/service-account.json" \
    "https://www.googleapis.com/storage/v1/b/[bucket name]/o/[service account to run gcsfuse.json]?alt=media"

  ${HOME_ROOT_OVERRIDE} docker run --privileged -d --restart=on-failure:5 --name ${SURICATA_CONTAINER_NAME} \
    --net=host --cap-add=net_admin --cap-add=sys_nice \
    -v /var/log/suricata:/var/log/suricata -v /etc/gcloud:/etc/gcloud \
    -e GCSFUSE_BUCKET="[your suricata log bucket name]" -e GCSFUSE_ARGS="--limit-ops-per-sec 100" -e GOOGLE_APPLICATION_CREDENTIALS=/etc/gcloud/service-account.json \
    -e SURICATA_OPTIONS="-i eth0 --set outputs.1.eve-log.enabled=no --set stats.enabled=no --set http-log.enabled=yes --set tls-log.enabled=yes" \
    ${SURICATA_IMAGE}

  # Remove the stream-events rules from the Suricata rules, as they produce many false positive alerts
  ${HOME_ROOT_OVERRIDE} docker exec -t ${SURICATA_CONTAINER_NAME} suricata-update --ignore stream-events.rules --ignore "*-deleted.rules"
fi
```

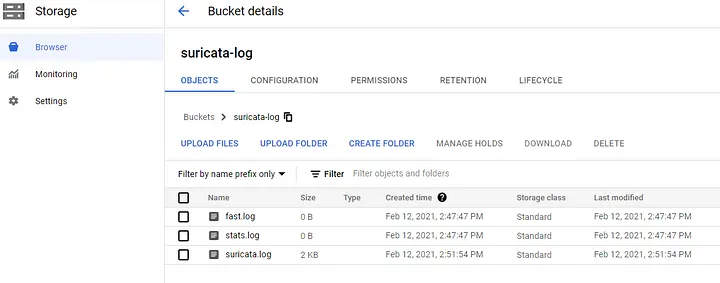

- Create another Google Cloud Storage bucket to store the Suricata log files.

- Go to IAM and create a new service account with permission to read/write the Suricata log bucket above. Export a key file for that service account and upload it to the same bucket where we keep setup.sh.

- Replace the names of both buckets and the service account key file name in setup.sh. All of them are wrapped in [] in the file.
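The bucket and service account steps above can also be scripted. This is a sketch assuming the gsutil and gcloud CLIs; the project, bucket, service account and key file names are hypothetical, and granting objectAdmin on the log bucket only is one reasonable way to express "read/write to the bucket". The script prints the commands unless DRY_RUN=0.

```bash
#!/usr/bin/env bash
# Sketch of the bucket and service account steps.
# All names are hypothetical; prints the commands unless DRY_RUN=0.
set -euo pipefail

PROJECT_ID="${PROJECT_ID:-my-network-project}"
LOCATION="${LOCATION:-us-central1}"
SETUP_BUCKET="${SETUP_BUCKET:-my-suricata-setup}"   # holds setup.sh and the key file
LOG_BUCKET="${LOG_BUCKET:-my-suricata-logs}"        # gcsfuse writes Suricata logs here
SA_NAME="${SA_NAME:-suricata-gcsfuse}"
SA_EMAIL="${SA_NAME}@${PROJECT_ID}.iam.gserviceaccount.com"
KEY_FILE="${KEY_FILE:-suricata-gcsfuse.json}"
DRY_RUN="${DRY_RUN:-1}"

# Print the command in dry-run mode; execute it otherwise.
run() {
  if [[ "$DRY_RUN" == "0" ]]; then "$@"; else printf '%s\n' "$*"; fi
}

main() {
  run gsutil mb -p "$PROJECT_ID" -l "$LOCATION" "gs://${SETUP_BUCKET}"
  run gsutil mb -p "$PROJECT_ID" -l "$LOCATION" "gs://${LOG_BUCKET}"
  run gcloud iam service-accounts create "$SA_NAME" \
    --project="$PROJECT_ID" --display-name="Suricata gcsfuse"
  # Object read/write on the log bucket only, not project-wide.
  run gsutil iam ch "serviceAccount:${SA_EMAIL}:objectAdmin" "gs://${LOG_BUCKET}"
  # Long-lived key: treat the downloaded file as a secret.
  run gcloud iam service-accounts keys create "$KEY_FILE" --iam-account="$SA_EMAIL"
  run gsutil cp setup.sh "$KEY_FILE" "gs://${SETUP_BUCKET}/"
}

main
```

The VM's own service account still needs read access to the setup bucket so that it can fetch setup.sh and the key file at boot; that grant is not shown.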

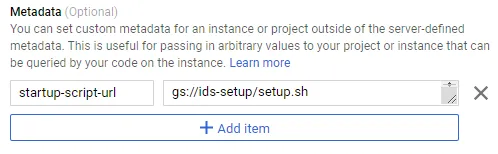

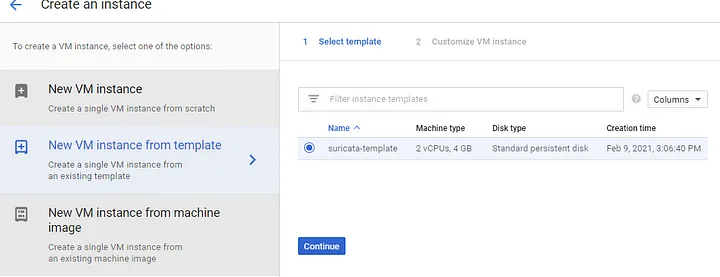

- Create a VM instance template with Container-Optimized OS and set the startup-script-url metadata key to point to the setup.sh file uploaded in the previous step.

Setting the startup script to the setup.sh previously uploaded to the Google Cloud Storage bucket
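A command-line equivalent of the instance template step could look like the sketch below. The template name, machine type and subnet path are hypothetical; NETWORK_PROJECT stands for the Shared VPC host project if you follow the pci-gke-blueprint layout. --no-address keeps the VM off the public Internet, which assumes something like Cloud NAT is available for pulling the container image; drop it if not.

```bash
#!/usr/bin/env bash
# Sketch: instance template on Container-Optimized OS that runs setup.sh at boot.
# Names and sizes are hypothetical; prints the command unless DRY_RUN=0.
set -euo pipefail

PROJECT_ID="${PROJECT_ID:-my-network-project}"
NETWORK_PROJECT="${NETWORK_PROJECT:-$PROJECT_ID}"   # host project when using Shared VPC
REGION="${REGION:-us-central1}"
COLLECTOR_SUBNET="${COLLECTOR_SUBNET:-ids-subnet}"
SETUP_BUCKET="${SETUP_BUCKET:-my-suricata-setup}"
DRY_RUN="${DRY_RUN:-1}"

# Print the command in dry-run mode; execute it otherwise.
run() {
  if [[ "$DRY_RUN" == "0" ]]; then "$@"; else printf '%s\n' "$*"; fi
}

create_template() {
  # cos-stable in the public cos-cloud project is the stable COS image family.
  # startup-script-url: the instance downloads and runs setup.sh at each boot.
  # --no-address: no external IP (assumes Cloud NAT or similar for image pulls).
  run gcloud compute instance-templates create suricata-ids-template \
    --project="$PROJECT_ID" \
    --machine-type=e2-standard-2 \
    --image-family=cos-stable --image-project=cos-cloud \
    --subnet="projects/${NETWORK_PROJECT}/regions/${REGION}/subnetworks/${COLLECTOR_SUBNET}" \
    --no-address \
    --metadata=startup-script-url="gs://${SETUP_BUCKET}/setup.sh"
}

create_template
```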

- Create an instance group from the instance template above. We create a managed instance group instead of a single VM to ensure that at least one instance is always running; this also lets us scale the number of VM instances if needed. The instance is designed to be stateless: Suricata runs in a Docker container on Container-Optimized OS, and Suricata's log files are written to the Google Cloud Storage bucket via gcsfuse. The magic that makes Suricata and gcsfuse work together is the suricata-gcsfuse container image.

Create instance group from created instance template

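Likewise, here is a sketch of the instance group step: a zonal managed instance group of size 1 built from the template, then added as the backend of the internal backend service behind the collector load balancer. The group, zone and backend service names are hypothetical, and the backend service (with its health check) is assumed to exist already.

```bash
#!/usr/bin/env bash
# Sketch: managed instance group from the template, attached as the backend
# of the internal load balancer that receives the mirrored traffic.
# Names are hypothetical; prints the commands unless DRY_RUN=0.
set -euo pipefail

PROJECT_ID="${PROJECT_ID:-my-network-project}"
REGION="${REGION:-us-central1}"
ZONE="${ZONE:-us-central1-a}"
BACKEND_SERVICE="${BACKEND_SERVICE:-ids-backend}"   # assumed to exist already
DRY_RUN="${DRY_RUN:-1}"

# Print the command in dry-run mode; execute it otherwise.
run() {
  if [[ "$DRY_RUN" == "0" ]]; then "$@"; else printf '%s\n' "$*"; fi
}

main() {
  # --size=1: the group maintains one Suricata VM as its target size.
  run gcloud compute instance-groups managed create suricata-ids-mig \
    --project="$PROJECT_ID" --zone="$ZONE" \
    --template=suricata-ids-template --size=1
  # Mirrored packets reach the VMs through the collector ILB's backend service.
  run gcloud compute backend-services add-backend "$BACKEND_SERVICE" \
    --project="$PROJECT_ID" --region="$REGION" \
    --instance-group=suricata-ids-mig --instance-group-zone="$ZONE"
}

main
```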

That's all. If you set everything up correctly, you should see Suricata's log files appear in the bucket we created for Suricata's logs.

Suricata's log files appear in the bucket a couple of seconds after the VM boots
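Because the logs live in a bucket, you can check them from any machine with gsutil instead of SSH. The sketch below lists the bucket and prints the last lines of fast.log; the bucket name is hypothetical, and it assumes Suricata's default fast.log alert output is still enabled in the suricata-gcsfuse image (setup.sh only disables eve-log and stats), which I have not verified.

```bash
#!/usr/bin/env bash
# Sketch: inspect Suricata's output in the log bucket without SSH.
# LOG_BUCKET is hypothetical; fast.log is assumed to be written by the container.
# Prints the commands unless DRY_RUN=0.
set -euo pipefail

LOG_BUCKET="${LOG_BUCKET:-my-suricata-logs}"
TAIL_LINES="${TAIL_LINES:-20}"
DRY_RUN="${DRY_RUN:-1}"

list_logs() {
  if [[ "$DRY_RUN" == "0" ]]; then
    gsutil ls -l "gs://${LOG_BUCKET}/"
  else
    echo "gsutil ls -l gs://${LOG_BUCKET}/"
  fi
}

recent_alerts() {
  # Stream the object and keep only the most recent alert lines.
  if [[ "$DRY_RUN" == "0" ]]; then
    gsutil cat "gs://${LOG_BUCKET}/fast.log" | tail -n "$TAIL_LINES"
  else
    echo "gsutil cat gs://${LOG_BUCKET}/fast.log | tail -n ${TAIL_LINES}"
  fi
}

list_logs
recent_alerts
```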

FAQ

Q: Why not just create a VM with a plain Linux OS and configure Suricata manually? Why bother with Container-Optimized OS?

A: Container-Optimized OS is locked down for security: you cannot install anything on the Container-Optimized OS host, but you can run as many containers as its resources allow.

Q: Why keep Suricata's log files in a Google Cloud Storage bucket instead of on the VM's persistent disk?

A: In a PCI DSS environment, SSH access to instances within the network is normally strongly discouraged. Without SSH, there is no way to read log files stored on the VM. Some PCI auditors may provide a tool to install on the VM that feeds the IDS log file into their monitoring tool, but wouldn't you want to see the suspicious requests to your resources yourself?

Q: Why do we need to upload a service account key file that has read/write permission on Suricata's log bucket?

A: Container-Optimized OS, like other VM images, already has default Google Cloud credentials belonging to the service account that runs the VM. However, gcsfuse runs inside the container, not on the VM itself, so we need the service account key file inside the suricata-gcsfuse container. This is not best practice, but baking the service account key file into the image is not an option either.